NVIDIA H200 NVL 141GB PCIe GPU Accelerator (Part Number 900-21010-0040-000)

Breathe easy. Returns accepted.

Shipping: International shipments may be subject to customs processing and additional charges.

Delivery: Please allow additional time if international delivery is subject to customs processing.

Returns: 14-day return policy. Seller pays return shipping.

Free shipping. We accept NET 30-day orders. Get a decision in seconds, with no impact on your credit.

If you need a large quantity of NVIDIA H200 NVL 141GB units, call us toll-free on WhatsApp at (+86) 151-0113-5020 or request a quote via live chat, and our sales manager will contact you shortly.

Title

NVIDIA H200 NVL 141GB PCIe GPU Accelerator (Part Number 900-21010-0040-000) for Generative AI & HPC

Keywords

NVIDIA H200 NVL, 141GB HBM3e memory, PCIe Gen5 x16 accelerator, AI inference GPU, Hopper architecture GPU, buy H200 NVL, enterprise GPU accelerator, 900-21010-0040-000

Description

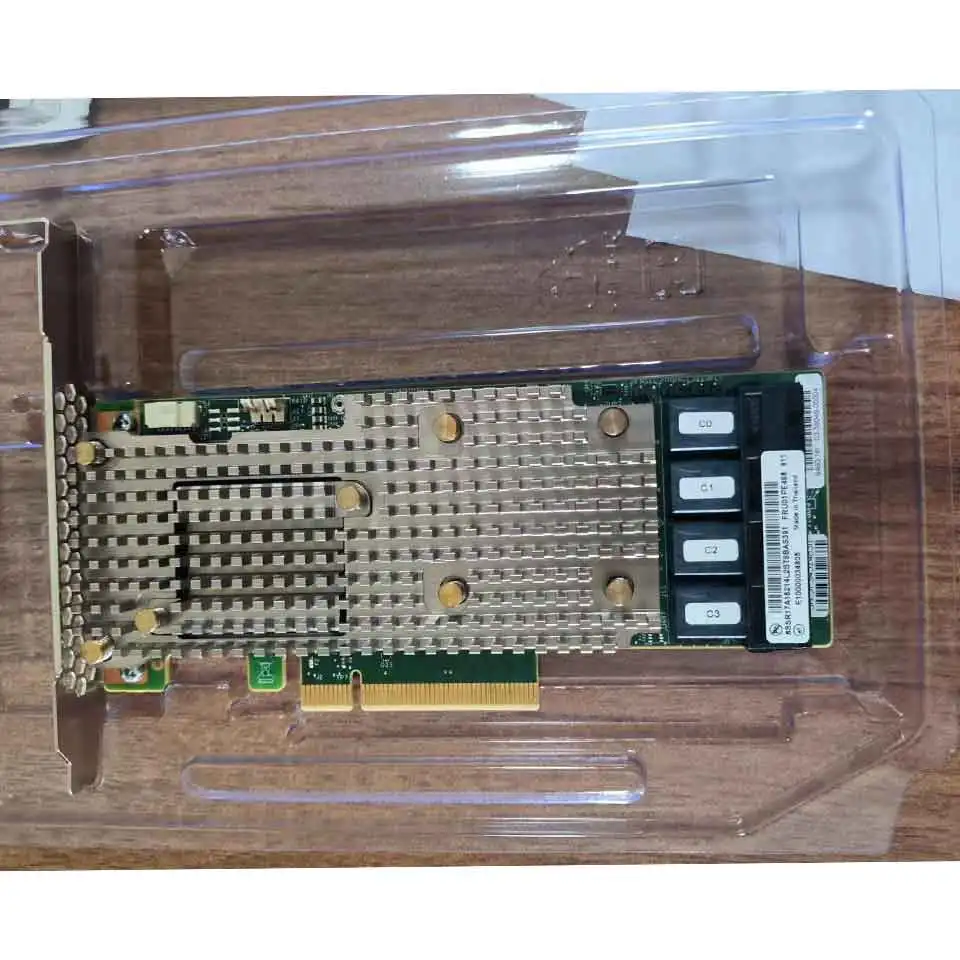

The NVIDIA H200 NVL (PN 900-21010-0040-000) is a premium GPU accelerator card built on NVIDIA’s Hopper architecture. It features **141 GB of HBM3e** ultra-fast memory with **4.8 TB/s memory bandwidth**, delivering exceptional performance for generative AI inference, large language models (LLMs), and high-performance computing (HPC) workloads.

The PCIe Gen5 ×16 interface allows this accelerator to be deployed in a wide range of servers that support modern PCIe slots, providing high throughput and compatibility. With part number 900-21010-0040-000, this unit is ideal for OEM integrators or existing GPU server upgrades.

The card supports multiple instances per GPU via NVIDIA's Multi-Instance GPU (MIG) partitioning, enabling flexible usage for multi-tenant inference, virtualized deployment, or fine-tuning smaller models. The larger memory and higher bandwidth let it hold larger model weights and datasets on the GPU without frequent swaps to host memory.

Power requirements are significant: the card's maximum power is configurable up to 600 W. Ensure that server power delivery, cooling, and form factor can support this card.

For enterprises evaluating the H200 NVL, this card is a substantial step up from previous-generation GPUs in memory capacity, inference throughput, and overall efficiency for AI and HPC tasks. It is especially valuable for inference farms, model serving, large-scale data science, and multi-GPU clusters.

Key Features

- 141 GB of HBM3e GPU memory with **4.8 TB/s memory bandwidth** for high throughput.

- PCIe Gen5 ×16 interface for broad server compatibility.

- High precision and mixed precision support: FP64, FP32, TF32, BFLOAT16, FP16, INT8, FP8, with Tensor Cores to accelerate deep learning and scientific computing.

- Multi-Instance GPU (MIG) support for partitioning resources per task or user, enabling flexible usage.

- High-bandwidth interconnect: 2- or 4-way NVLink bridges link cards in multi-GPU setups at up to 900 GB/s per GPU.

- Passive, air-cooled PCIe card (NVL version) that relies on chassis airflow, enabling deployment in standard air-cooled servers without liquid cooling.

Configuration

| Component | Specification / Details |
|---|---|
| Part Number / Model | NVIDIA H200 NVL, PN 900-21010-0040-000 |
| GPU Memory | 141 GB HBM3e |
| Memory Bandwidth | ≈ 4.8 TB/s |
| Interface | PCI Express Gen5 ×16 (NVL passive PCIe form) |
| Precision & Compute | FP64, FP32, TF32, BFLOAT16, FP16, INT8, FP8; Tensor Cores included |
| Multi-Instance Support | Up to 7 MIG instances, depending on configuration |
| Power Consumption | Up to 600 W (configurable) |
| Cooling & Form Factor | Passive, air-cooled PCIe card (NVL version); the SXM variant is a separate module for NVLink baseboard systems |
| Interconnect / Bridges | NVLink bridges for multi-GPU, PCIe Gen5 for host I/O |
| Supported Software / Stack | NVIDIA AI Enterprise, CUDA, cuDNN, TensorRT, ONNX, etc. |

Compatibility

The NVIDIA H200 NVL requires a server with a free PCIe Gen5 x16 slot, sufficient power delivery (up to 600 W per card), robust cooling (chassis airflow and fan capacity), and a compatible BIOS / firmware that supports recent NVIDIA drivers.
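As a rough way to check the power side of this requirement, the sketch below sums an assumed per-card budget with the rest of the system load and compares it against the power supply rating. The 600 W per-card figure, the 20% safety margin, and the example PSU and base-load numbers are all illustrative assumptions, not vendor sizing guidance; use the figures from your server's documentation.

```python
def psu_headroom_watts(psu_watts, base_system_watts, gpu_count,
                       gpu_watts=600, margin_pct=20):
    """Return spare PSU capacity in watts (negative means undersized).

    gpu_watts and margin_pct are assumed defaults for illustration only.
    """
    load = base_system_watts + gpu_count * gpu_watts
    required = load * (100 + margin_pct) // 100  # add safety margin
    return psu_watts - required


# Hypothetical server: 3200 W of PSU capacity, 800 W for CPUs, memory, fans.
print(psu_headroom_watts(psu_watts=3200, base_system_watts=800, gpu_count=2))
print(psu_headroom_watts(psu_watts=3200, base_system_watts=800, gpu_count=4))
```

A negative result for the four-card case indicates that this hypothetical chassis would need a larger power supply or fewer cards.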

It is supported in many NVIDIA-Certified systems, including those from Lenovo ThinkSystem, Dell, and other OEMs that list H200 NVL as a certified option. MGX-based and other certified servers can use NVLink bridges between cards for multi-GPU setups; HGX platforms use the SXM variant instead.

Software compatibility includes Linux distributions like Ubuntu 24.04 LTS, Red Hat Enterprise Linux 9.x, and others; drivers via NVIDIA CUDA / AI Enterprise stack. Verify that your kernel and OS support the Hopper architecture and necessary driver versions.
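One way to confirm that the driver sees the card after installation is to query `nvidia-smi`. The sketch below wraps the standard `--query-gpu` and `--format=csv,noheader` options and parses the result; the helper names and the sample line (including its memory and driver values) are hypothetical and only illustrate the expected shape of the output.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,driver_version",
    "--format=csv,noheader",
]


def parse_gpu_rows(csv_text):
    """Parse 'name, memory, driver' lines into a list of dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        name, memory, driver = [field.strip() for field in line.split(",")]
        rows.append({"name": name, "memory_total": memory,
                     "driver_version": driver})
    return rows


def query_gpus():
    """Run nvidia-smi (requires an installed NVIDIA driver) and parse it."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)


# Illustrative sample only; real values depend on the driver and system.
SAMPLE_OUTPUT = "NVIDIA H200 NVL, 143771 MiB, 570.86.15\n"
print(parse_gpu_rows(SAMPLE_OUTPUT))
```

On a machine with the driver installed, calling `query_gpus()` returns one entry per detected GPU.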

Usage Scenarios

1) Large Language Model (LLM) Inference & Serving: With 141 GB memory and high bandwidth, the H200 NVL can serve large-parameter models with fewer memory bottlenecks, enabling faster inference and reduced context-switch overhead. Ideal for AI-as-a-service or APIs.

2) High-Performance Computing (HPC) and Scientific Simulations: Workloads involving FP64, large matrix operations, computational fluid dynamics, and climate modeling will benefit from the Hopper architecture and HBM3e bandwidth.

3) AI Training (Fine-Tuning) & Mixed Precision Workloads: While full training of massive models might use SXM versions, the NVL form is excellent for fine-tuning or mixed precision training, and offers good memory bandwidth and size.

4) Data Center GPU Cloud Infrastructure: In multi-tenant GPU clouds, the ability to partition the H200 into multiple instances (MIG/other partitioning) helps allocate GPU resources efficiently. Also useful for GPU aggregation nodes.

5) Graphics / Rendering / Visualization: For high resolution rendering, 3D simulation, VR/AR content, scientific visualization, the large memory buffer and high bandwidth can hold large datasets and texture assets.
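To put the bandwidth figure in the LLM serving scenario into perspective, a common back-of-envelope estimate treats single-stream token generation as memory-bandwidth bound: each generated token reads every model weight once, so throughput is at most bandwidth divided by weight size. The sketch below applies that estimate under loud assumptions: batch size 1, the full 4.8 TB/s peak is achieved, and KV-cache and activation traffic are ignored, so real throughput will be lower. The function name and model sizes are illustrative.

```python
def max_decode_tokens_per_s(params_billion, bytes_per_param,
                            bandwidth_tb_s=4.8):
    """Upper bound on batch-1 decode rate if weight reads dominate."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / weight_bytes


# Hypothetical 70-billion-parameter model at two precisions.
for label, width in (("FP16", 2), ("FP8", 1)):
    rate = max_decode_tokens_per_s(70, width)
    print(f"{label}: at most about {rate:.0f} tokens/s per stream")
```

The bound doubles when the weight width halves, which is one reason lower-precision formats matter for serving.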

Frequently Asked Questions

Q: What is the difference between H200 NVL and H200 SXM / HGX versions?

A: The NVL version is a PCIe form-factor card; it is air-cooled and designed for broad server compatibility via PCIe Gen5, with NVLink bridges available to link cards. SXM versions are modules for HGX baseboards, with a higher power envelope, cooling designed into the system, and tighter multi-GPU scaling over NVLink.

Q: How many GPU partitions (MIG instances) can I run on the H200 NVL?

A: Up to **7 MIG instances** are supported, depending on configuration; in the 7-way split each instance gets about 16.5 GB of memory. This enables multi-tenant or multi-workload deployment.

Q: What server power supply & cooling should I plan for when installing this GPU?

A: Plan for up to **600 W** per card under load, plus sufficient cooling in the chassis. Ensure the PCIe slot is properly supported, airflow is unobstructed, and power connectors meet NVIDIA specifications.

Q: Will the GPU memory be fully usable for large model inference without offloading?

A: Largely, yes. The 141 GB of HBM3e provides a large contiguous memory pool, reducing the need for offloading or frequent data movement. Actual usable capacity depends on model size, precision, batch size, and any memory reserved by the system or driver. Lower-precision formats (FP16/FP8) can also help fit larger models.
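The fit question above comes down to simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter, plus some allowance for KV cache, activations, and driver overhead. The sketch below is a minimal estimate under stated assumptions: decimal gigabytes, a flat `overhead_gb` allowance chosen purely for illustration, and hypothetical model sizes.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}


def fits_in_memory(params_billion, precision, capacity_gb=141,
                   overhead_gb=10):
    """Return True if weights plus an assumed overhead fit in capacity_gb."""
    weights_gb = params_billion * BYTES_PER_PARAM[precision]
    return weights_gb + overhead_gb <= capacity_gb


# Hypothetical 70B-parameter model on the full 141 GB card.
print(fits_in_memory(70, "fp16"))  # 140 GB of weights plus overhead
print(fits_in_memory(70, "fp8"))   # 70 GB of weights plus overhead

# Hypothetical 7B-parameter model inside one ~16.5 GB MIG instance.
print(fits_in_memory(7, "fp16", capacity_gb=16.5, overhead_gb=2))
```

Under these assumptions the 70B model does not fit at FP16 once overhead is counted but fits comfortably at FP8, which matches the point about lower precision in the answer above.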
